Introduction

As organizations accelerate their adoption of GenAI, choosing the right technical architecture has become a critical strategic decision. The right choice depends on several key factors, such as cost management, data sensitivity, internal readiness and capabilities, and the range of AI models the organization intends to use. This document analyzes three main deployment models: fully managed API access, private cloud servers, and private on-premises hardware. It highlights the implications of each model across several dimensions.

Senior leaders will gain clarity on the practical trade-offs. They will also get guidance for making informed, data-driven decisions about the architecture, sizing, and resource allocation needed to deploy GenAI successfully.

We also cover some of the current hardware and cloud options available on the market.

*Note: LLM and GenAI technologies are evolving rapidly. This analysis reflects our view at the time of writing.

Core Architectures – Possible Solutions

Adopting GenAI in an organization requires an appropriate technical architecture. There are currently three main architectural options:

- accessing GenAI models through an API
- hosting AI models on a virtual private server (in the cloud)
- running AI models on private hardware (on-premises)

Closed models such as OpenAI's GPT models, Claude, Gemini, and Grok cannot be deployed on an organization's own machines. Running an LLM on a local server or a private VM therefore means using open models such as Llama, DeepSeek, or Mistral.

- Fully API-based deployment

Organizations connect to externally hosted GenAI models through application programming interfaces (APIs). This approach relies on cloud services managed entirely by a third-party provider, and the organization's applications interact directly with the external API.

- Virtual private server (cloud-hosted private instance)

In this configuration, organizations run GenAI models on a virtual private server (VPS) supplied by a cloud provider. They manage their own model instances, infrastructure configuration, and resource allocation within the cloud environment.

- Private hardware deployment (on-premises)

Organizations deploy and operate GenAI models on hardware they own, managed within their own data center or premises. This configuration gives them in-house control over the hardware, software, and all related infrastructure.
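From the application's point of view, the three options can look almost identical. The sketch below is a minimal illustration that assumes every option exposes an OpenAI-compatible chat completions endpoint. Self-hosted serving stacks such as vLLM can provide one. Under that assumption, only the base URL, model name, and credentials differ between options. All hostnames, the IP address, the API key, and the model identifiers are hypothetical placeholders.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    label: str
    base_url: str                  # placeholder endpoint, not a real service
    model: str                     # placeholder model identifier
    api_key: Optional[str] = None  # external APIs need a key; an internal server may not


# Hypothetical endpoints for the three architectures.
DEPLOYMENTS = {
    "api": Deployment("Fully API-based", "https://api.example-llm-vendor.com/v1",
                      "vendor-large-model", api_key="YOUR_API_KEY"),
    "vps": Deployment("Cloud VPS", "https://llm.vps.example.com/v1",
                      "meta-llama/Meta-Llama-3-8B-Instruct"),
    "onprem": Deployment("On-premises", "http://10.0.0.5:8000/v1",
                         "meta-llama/Meta-Llama-3-8B-Instruct"),
}


def build_chat_request(dep: Deployment, prompt: str) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style chat completions request."""
    body = json.dumps({
        "model": dep.model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if dep.api_key:
        headers["Authorization"] = f"Bearer {dep.api_key}"
    return urllib.request.Request(
        dep.base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers=headers,
        method="POST",
    )
```

To send a request, pass it to `urllib.request.urlopen(req)`. Keeping the deployment choice in configuration like this makes it cheaper to move a workload between options later.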

Considerations for Choosing the Right Architecture

Choosing the best architecture for GenAI in an organization means balancing many strategic factors. The following table summarizes key factors such as cost, data security, maintenance requirements, and model availability. It is intended to guide the choice among the three main deployment options.

Table 1: Key factors at a glance

| Consideration | Fully API-based | Virtual private server (cloud-hosted) | Private hardware (on-premises) |
|---|---|---|---|
| Cost | Generally lower initial cost (pay-as-you-go); scales with usage; long-term costs may be higher | Moderate cost, including cloud infrastructure and operating expenses; moderate upfront investment | High initial investment for hardware purchase and infrastructure setup; costs may decrease at larger scale |
| Data security | Data resides outside the organization; security is governed by the provider's policies | Enhanced security within a private cloud environment, with better isolation and control than public APIs | Highest level of data security; data remains entirely within the organization |
| Maintenance | Minimal; the provider manages infrastructure and model updates | Moderate; the organization handles model deployment and infrastructure management, while the cloud vendor maintains the hardware | Highest burden; the organization is responsible for hardware, infrastructure, model deployment, and all updates |
| LLM variety | Widest choice: closed models (e.g., GPT-4, Gemini) through provider APIs, plus open-source models through API providers such as Together.ai | Limited to open-source models or models licensed for self-hosting (closed models such as GPT-4 or Gemini are usually unavailable) | Limited to open-source models or models licensed for self-hosting (closed models such as GPT-4 or Gemini are usually unavailable) |
| Surrounding AI ecosystem | Broad integration with other systems | Requires implementation effort | Requires implementation effort |
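One way to apply Table 1 is a simple weighted-scoring exercise. In the sketch below, each row of the table is encoded as an ordinal score from 1 (least favorable) to 3 (most favorable). The scores are based only on the table's relative wording. The factor names and weights are hypothetical and should reflect your own priorities.

```python
# Ordinal scores encoding Table 1: 3 = most favorable, 1 = least favorable.
# "vps" and "onprem" tie wherever the table describes them identically.
SCORES = {
    "low_upfront_cost": {"api": 3, "vps": 2, "onprem": 1},
    "data_security":    {"api": 1, "vps": 2, "onprem": 3},
    "low_maintenance":  {"api": 3, "vps": 2, "onprem": 1},
    "model_variety":    {"api": 3, "vps": 1, "onprem": 1},
    "ecosystem":        {"api": 3, "vps": 1, "onprem": 1},
}


def rank_options(weights: dict) -> list:
    """Return (option, weighted score) pairs, best first."""
    unknown = set(weights) - set(SCORES)
    if unknown:
        raise KeyError(f"unknown factors: {sorted(unknown)}")
    totals = {"api": 0.0, "vps": 0.0, "onprem": 0.0}
    for factor, weight in weights.items():
        for option, score in SCORES[factor].items():
            totals[option] += weight * score
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Example: a regulated organization that weights data security five times higher.
regulated = rank_options({"low_upfront_cost": 1, "data_security": 5,
                          "low_maintenance": 1, "model_variety": 1, "ecosystem": 1})
```

With data security weighted five times higher, on-premises hardware ranks first (19, against 17 for the API and 16 for a VPS). With equal weights, the API option comes first. The cost row scores upfront cost only. It does not capture Table 1's point that on-premises costs may fall at scale.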

ต้นทุนรวมของการเป็นเจ้าของ (TCO)

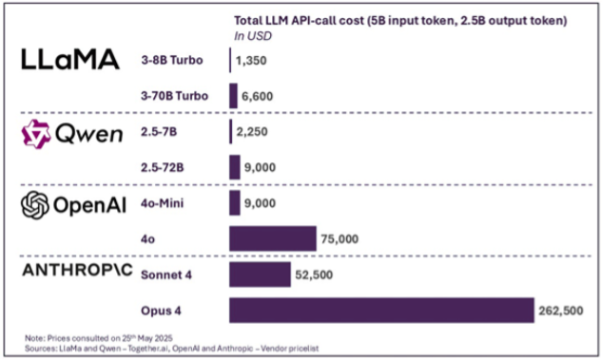

ต้นทุนของโครงการ GenAI แตกต่างกันอย่างมาก ทำให้การทำความเข้าใจปัจจัยที่ส่งผลต่อ TCO เป็นสิ่งสำคัญ ปัจจัยหลักประการหนึ่งคือต้นทุนการอนุมานที่เกี่ยวข้องกับการใช้ LLM ที่แตกต่างกัน

เพื่อแสดงสิ่งนี้ เราจำลองสถานการณ์ที่แอปพลิเคชันที่ใช้ API ประมวลผลโทเค็นอินพุต 5 พันล้านรายการและโทเค็นเอาต์พุต 2.5 พันล้านรายการต่อเดือน ซึ่งเทียบเท่ากับข้อความอินพุต 10 ล้านหน้าและข้อความเอาต์พุต 5 ล้านหน้า

ต้นทุนการอนุมานรายเดือนโดยประมาณนั้นแตกต่างกันอย่างมาก โดยเริ่มจาก 1,350 เหรียญสหรัฐสำหรับ LLM ที่ประหยัดที่สุดในการจำลองของเรา (LLaMa 3-8B) จนถึง 262,500 เหรียญสหรัฐสำหรับรุ่น Opus 4 แบบพรีเมียม

การเลือกโมเดลที่ถูกต้องสำหรับแต่ละงานจึงเป็นทักษะที่สำคัญในการบริหารจัดการต้นทุนอย่างมีประสิทธิภาพ
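The arithmetic behind these figures is simple. This minimal sketch assumes per-million-token prices of USD 0.18 for both input and output on Llama 3 8B, and USD 15 input / USD 75 output on Claude Opus 4. These prices are our assumption, chosen because they reproduce the totals quoted above. Provider prices change often.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    input_per_million: float   # USD per 1M input tokens (assumed list price)
    output_per_million: float  # USD per 1M output tokens (assumed list price)


def monthly_inference_cost(input_tokens: int, output_tokens: int, p: TokenPricing) -> float:
    """API cost in USD for one month of traffic at per-million-token prices."""
    return ((input_tokens / 1_000_000) * p.input_per_million
            + (output_tokens / 1_000_000) * p.output_per_million)


INPUT_TOKENS = 5_000_000_000   # 5 billion input tokens per month
OUTPUT_TOKENS = 2_500_000_000  # 2.5 billion output tokens per month
TOKENS_PER_PAGE = 500          # ratio implied by 5B tokens ~ 10M pages

# Assumed prices that reproduce the figures quoted in the text.
PRICES = {
    "Llama 3 8B": TokenPricing(0.18, 0.18),
    "Claude Opus 4": TokenPricing(15.00, 75.00),
}

for name, pricing in PRICES.items():
    cost = monthly_inference_cost(INPUT_TOKENS, OUTPUT_TOKENS, pricing)
    print(f"{name}: USD {cost:,.0f} per month")
```

Running it prints USD 1,350 per month for Llama 3 8B and USD 262,500 per month for Claude Opus 4. Output tokens are often priced higher than input tokens, so the input/output mix matters as much as total volume.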

Appendix 1: Total cost of ownership

However, the cost of a GenAI project does not depend on model choice alone. The underlying architecture adds further complexity, and each architecture brings its own cost considerations that affect TCO:

- API-based services: a simple, transparent pricing structure with the least complexity.

- Virtual private server (cloud-hosted): moderate complexity, including clearly defined cloud infrastructure costs and manageable operating expenses.

- Private hardware (on-premises): the highest complexity. There are multiple cost dimensions (hardware, infrastructure, operations staff, upfront facility costs, licensing) that require careful evaluation and long-term planning.

The broad cost categories across the three solution configurations are highlighted below.

Table 2: Cost categories

| Cost component | Fully API-based | Virtual private server (cloud-hosted) | Private hardware (on-premises) |
|---|---|---|---|
| Initial capital cost | Minimal setup fees; initial integration costs | Cloud infrastructure setup, configuration, and initial integration | Hardware procurement (GPU/CPU, servers, networking, storage); facility setup costs |
| Infrastructure costs (ongoing) | None directly (included in usage fees) | Cloud service fees (compute, storage, bandwidth, backup) | Data center operating costs: electricity, cooling, and real estate |
| Usage costs (model inference) | Pay-per-token or per-API-call fees | Cloud compute instances (hourly or monthly rates) | Hardware depreciation, power consumption, and compute resource allocation costs |
| Model licensing costs | Included in API fees | Possible licenses for some premium models | Possible licenses for some premium models, or commercial open-source support agreements |
| Model training / fine-tuning costs | Typically pay-per-use or subscription fees for fine-tuning services | Compute costs for cloud training instances; data storage fees | Hardware used for training, electricity, and dedicated storage infrastructure |
| Software infrastructure costs | Minimal (often part of the API service or the enterprise stack) | OS licenses, container/orchestration platform licenses (if any), software management tools | OS licenses, management software licenses (VMware, Kubernetes/OpenShift, security platforms), monitoring tools |
| Security and compliance costs | Basic compliance (often included); optional add-on services | Cloud security management tools, compliance management services, and access control services | On-premises security systems, network security, compliance audits, certifications |
| Data transfer and network costs | API request data fees, usually minimal | Cloud network egress fees; inter-region data transfer fees | Maintenance of on-premises network infrastructure, ISP connectivity, dedicated fiber/network management |
| Disaster recovery and backup costs | Typically included, or minimal additional fees | Cloud backup and disaster recovery fees | Backup infrastructure, disaster recovery planning, and off-site backups |
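Table 2 implies a trade-off between recurring usage fees and upfront capital. The following is a minimal break-even sketch under three assumptions: a flat monthly API bill, a one-off on-premises capital outlay, and a flat monthly on-premises operating cost. Every figure in the example is a hypothetical placeholder, not a result from our simulation.

```python
import math


def cumulative_cost(months: int, monthly: float, upfront: float = 0.0) -> float:
    """Total spend after `months` months: one-off upfront cost plus a flat monthly cost."""
    return upfront + months * monthly


def breakeven_months(api_monthly: float, onprem_capex: float, onprem_monthly: float) -> float:
    """Months after which cumulative on-premises spend drops below API spend.

    Returns math.inf if on-premises running costs are not lower than the API bill,
    because the upfront investment is then never recovered.
    """
    monthly_saving = api_monthly - onprem_monthly
    if monthly_saving <= 0:
        return math.inf
    return onprem_capex / monthly_saving


# Hypothetical inputs, for illustration only.
API_MONTHLY = 50_000      # USD per month in API fees
ONPREM_CAPEX = 400_000    # USD for hardware and facility setup
ONPREM_MONTHLY = 10_000   # USD per month for power, cooling, staff share, support

months = breakeven_months(API_MONTHLY, ONPREM_CAPEX, ONPREM_MONTHLY)  # 10.0
```

With these placeholder inputs, the hardware pays for itself after 10 months. Over 36 months, the API option costs USD 1.8 million against USD 760,000 on-premises. The model deliberately ignores hardware refresh cycles, financing, and utilization. The ongoing categories in Table 2 should be folded into the monthly figure before drawing conclusions.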

Other Considerations

Data Security Considerations

Most organizations handle some sensitive data, but certain sectors face distinct privacy concerns with generative AI. For example:

- Retail: Retailers face serious concerns about protecting customer data, especially when they use AI for demographic analysis, customer segmentation, and personalization. If they do not plan to anonymize customer data adequately in a cloud environment, they should take steps to ensure the data is stored securely.

- Healthcare: Key concerns include the inadvertent disclosure of protected health information (PHI), compliance with strict regulations such as HIPAA, and ensuring accuracy to avoid harmful errors.

- Finance: Financial institutions prioritize protecting sensitive customer and proprietary data, complying with regulations, and preventing data leakage or misuse.

- Government: Public agencies prioritize data sovereignty, national security, compliance with strict privacy laws, and preventing inadvertent exposure of confidential citizen data.

- Legal: Law firms face significant concerns about:
  - attorney-client confidentiality
  - protecting privileged case information
  - complying with professional ethics standards
  - avoiding inadvertent disclosure through external AI services

Data regulations are tightening, and the cost of non-compliance is rising across regions. Companies increasingly need to make disclosures to their boards, shareholders, and customers. Deciding where data is stored and who can access it has therefore become a key decision point for the chosen GenAI architecture.

Today, data security extends to LLM security, because a key LLM risk lies in how data is used for training. As LLMs become more widespread, more LLM-specific regulations are likely to follow.

In short, an organization's architecture, security, and risk teams must weigh these factors before making architectural decisions.
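One way to act on these factors is a routing policy that decides, per request, which deployment may see the data. The following minimal sketch assumes a hypothetical four-level classification scheme. Under this scheme, only public and internal data may go to an external API. The levels, examples, and mapping are illustrative and are not a regulatory standard.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2  # e.g. customer PII, proprietary financial data
    RESTRICTED = 3    # e.g. PHI, privileged legal material, classified records


# Highest sensitivity each deployment may receive (hypothetical policy).
MAX_ALLOWED = {
    "api": Sensitivity.INTERNAL,
    "vps": Sensitivity.CONFIDENTIAL,
    "onprem": Sensitivity.RESTRICTED,
}

# When several deployments are allowed, prefer the one with the least operational burden.
PREFERENCE = ("api", "vps", "onprem")


def route(level: Sensitivity) -> str:
    """Return the first preferred deployment permitted to process data at `level`."""
    for target in PREFERENCE:
        if level <= MAX_ALLOWED[target]:
            return target
    raise ValueError(f"no deployment is permitted for {level.name} data")
```

Under this policy, a retail segmentation job on customer PII would route to the private cloud. A request involving PHI or privileged legal material would stay on-premises. Risk teams review and change the policy table, not the application code.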

การพิจารณาความสามารถของทีม

การใช้ LLM แบบภายในองค์กรช่วยให้องค์กรสามารถควบคุมและรักษาความปลอดภัยข้อมูลได้มากขึ้น แต่ต้องมีความเชี่ยวชาญด้านชุดความสามารถที่แตกต่างกันเมื่อเทียบกับบริการที่ใช้ API

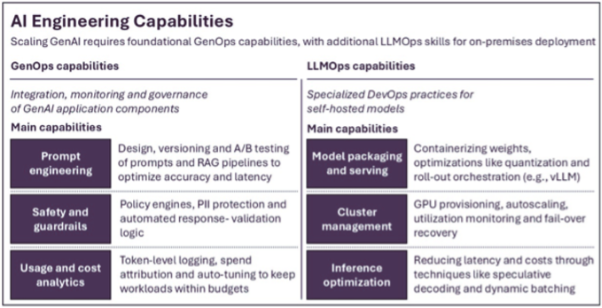

ไม่ว่าจะเลือกสถาปัตยกรรมใด ความสามารถพื้นฐานของ AI จะต้องได้รับการพัฒนาเมื่อปรับขนาดแอปพลิเคชัน GenAI ความสามารถเหล่านี้เรียกว่า GenOps และเกี่ยวข้องกับการรวมและเพิ่มประสิทธิภาพของส่วนประกอบแอปพลิเคชัน GenAI เช่น วิศวกรรมที่รวดเร็ว แนวทางป้องกัน และการวิเคราะห์ต้นทุน

การเลือกสถาปัตยกรรมภายในองค์กรนั้นจำเป็นต้องมีการพัฒนาความสามารถที่เรียกว่า LLMOps ทักษะเหล่านี้ครอบคลุมความสามารถที่แตกต่างกัน เช่น การให้บริการโมเดลขั้นสูง การจัดการโครงสร้างพื้นฐาน GPU และการเพิ่มประสิทธิภาพโครงสร้างพื้นฐาน สิ่งสำคัญคือ ความสามารถของ LLMOps ไม่ใช่แค่ส่วนขยายของทักษะ GenOps เท่านั้น ทักษะ LLMOps ต้องการความเชี่ยวชาญเชิงลึกที่ใกล้ชิดกับทีมแพลตฟอร์ม ในขณะที่ทีม GenOps จะต้องใกล้ชิดกับวิศวกรแอปพลิเคชันมากขึ้น ดังนั้น องค์กรที่ตัดสินใจใช้โมเดลโอเพ่นซอร์สภายในองค์กรจะต้องพัฒนาความสามารถและทีมงาน AI เพิ่มเติม

ภาพด้านล่างนี้ขยายความความสามารถที่จำเป็นสำหรับทั้ง GenOps และ LLMOps

ภาคผนวก 2: ความสามารถด้านวิศวกรรม AI
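To make GenOps more concrete, the sketch below wraps a model call with three of the components named above:

- a prompt template (prompt engineering)
- an input check (guardrails)
- per-application spend tracking (cost analysis)

It is a minimal illustration. The blocked pattern, the per-token price, and the stub model call are hypothetical stand-ins for whichever deployment and policies are actually used.

```python
import re
from collections import defaultdict
from typing import Callable, Tuple

# Prompt engineering: one reviewed template instead of ad hoc strings.
PROMPT_TEMPLATE = "You are an assistant for the {department} team.\nQuestion: {question}"

# Guardrail (hypothetical rule): block prompts that appear to contain a card number.
BLOCKED_PATTERNS = [re.compile(r"\b(?:\d[ -]?){13,16}\b")]

# Cost analysis: assumed flat price per token, in USD.
PRICE_PER_TOKEN_USD = 0.18 / 1_000_000


class GenOpsClient:
    def __init__(self, model_call: Callable[[str], Tuple[str, int]]):
        self.model_call = model_call     # returns (response text, tokens used)
        self.spend = defaultdict(float)  # USD spent per application

    def ask(self, app: str, department: str, question: str) -> str:
        prompt = PROMPT_TEMPLATE.format(department=department, question=question)
        if any(p.search(prompt) for p in BLOCKED_PATTERNS):
            raise ValueError("prompt blocked by guardrail")
        text, tokens = self.model_call(prompt)
        self.spend[app] += tokens * PRICE_PER_TOKEN_USD
        return text


# Usage with a stub standing in for a real model endpoint.
client = GenOpsClient(lambda prompt: ("stub answer", 1_000))
answer = client.ask("hr-bot", "HR", "How many days of annual leave do I get?")
```

The wrapper does not care which deployment sits behind `model_call`, so the same GenOps layer applies to all three architectures.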

LLM Availability Considerations

Model choice for on-premises deployment is generally more limited than with cloud API solutions. Cloud API providers offer immediate access to proprietary models (such as OpenAI's GPT series or Google's Gemini). Through third-party API services, they also offer many open-source models. On-premises deployments, by contrast, usually rely only on open-source models or on commercially licensed models with publicly available weights. Proprietary models, generally regarded as the state of the art, usually cannot be hosted on-premises because of licensing restrictions and provider policies. Organizations taking the on-premises route may therefore face a narrower choice of models, which can limit flexibility and capability.

However, the LLM market is changing very quickly. As new open-source models are released and existing ones improve, the gap may narrow over time. With the right architecture, models can be swapped or updated without re-implementing the application.
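One architectural pattern that supports this is to have application code refer to a stable alias rather than a concrete model name. Swapping a model then becomes a configuration change. The following minimal sketch assumes a hypothetical in-memory registry. The alias and the Hugging Face-style model identifiers are placeholders.

```python
class ModelRegistry:
    """Maps stable aliases used in application code to concrete model identifiers."""

    def __init__(self, aliases: dict):
        self._aliases = dict(aliases)

    def resolve(self, alias: str) -> str:
        return self._aliases[alias]  # KeyError for unknown aliases is deliberate

    def swap(self, alias: str, new_model: str) -> str:
        """Point `alias` at `new_model` and return the previous model for rollback."""
        previous = self._aliases[alias]
        self._aliases[alias] = new_model
        return previous


registry = ModelRegistry({"chat-default": "meta-llama/Meta-Llama-3-8B-Instruct"})
previous = registry.swap("chat-default", "mistralai/Mistral-7B-Instruct-v0.3")
```

In practice the mapping would live in configuration or a model gateway, but the principle is the same. Because `swap` returns the previous model, a rollback is a single call.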

The following tables cover three categories of models: high-efficiency models, mid-range models, and small language models. In each table:

- "Parameters" indicates model size.
- "Developer" is the organization that originally developed the model.
- "Key features" summarizes the model's main characteristics.

Table 3: High-efficiency models (best for small servers)

| Model | Parameters | Developer | Key features |
|---|---|---|---|
| Llama 3 8B | 8 billion | Meta | Compact model that balances performance and resource use |
| Mistral 7B | 7 billion | Mistral AI | Excellent performance-to-size ratio; well suited to coding tasks |
| Phi-3 Mini | 3.8 billion | Microsoft | Small but capable model |
| TinyLlama | 1.1 billion | Various contributors | Very lightweight option for basic tasks |
| Gemma | 2B / 7B | Google | Efficient model with good instruction following |

Table 4: Mid-range models (small-to-medium server requirements)

| Model | Parameters | Developer | Key features |
|---|---|---|---|
| Llama 3 70B | 70 billion | Meta | Larger model with stronger capabilities |
| Mixtral 8x7B | ~45 billion (total) | Mistral AI | Mixture-of-experts architecture with strong performance |
| Phi-3 Mini | 13 billion | Microsoft | Fine-tuned version with better instruction following |
| TinyLlama | 40 billion | Various contributors | Powerful open model with broad knowledge |
| Gemma | 7B / 13B | Google | Specialized for programming tasks |

SLM Models (small-to-medium server requirements)

Small language models (SLMs) are gaining popularity for specific use cases because they are easier to deploy and perform well on narrow tasks. As they mature, they may become especially suited to deployment on edge devices or to highly specific use cases.

Table 5: SLM models (small-to-medium server requirements)

| Model | Developer | Key features |
|---|---|---|
| MobileBERT | Google, Carnegie Mellon University | Compressed BERT model for mobile devices |
| DistilBERT | Hugging Face | Lighter, faster version of BERT with a 40% size reduction |
| BERT-Tiny | Google | Very small model with only 4.4 million parameters |
| GPT-2 Small | OpenAI | Smallest GPT-2 variant, with 124 million parameters |
| FLAN-T5 Small | Google | Google's compact instruction-tuned model |

The models listed above reflect the landscape at the time of writing. This is a fast-moving area that needs to be monitored closely.

Model Selection and Infrastructure Requirements

The choice of LLM directly affects infrastructure requirements and costs. Infrastructure cost and performance depend on several parameters, such as GPU memory, compute power (TFLOPS), and memory bandwidth. A comprehensive cost calculation is beyond the scope of this document. Instead, we briefly discuss how the choice of LLM affects GPU memory requirements, since memory is one of the main drivers of infrastructure cost.

A simple rule of thumb estimates GPU memory from an LLM's parameter count. For inference, allow 2 bytes of memory per parameter (16-bit weights), plus an extra 20% for overhead. Applying this to the largest and smallest Llama-family models:

- Llama 3.1 405B requires a GPU investment of about USD 280,000. The model needs 972 GB of memory (405 billion × 2 bytes × 1.2). A setup of 8× NVIDIA H200 GPUs (141 GB each) provides enough capacity to run inference on the model. At USD 35,000 per GPU, the total comes to USD 280,000.

- TinyLlama requires a GPU investment of only about USD 300. With 1.1 billion parameters, it needs 2.64 GB of memory. An NVIDIA RTX 4060 with 8 GB of memory, at around USD 300, handles this comfortably.
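The rule of thumb and the GPU counts above can be reproduced in a few lines. In this minimal sketch, the 2-bytes-per-parameter and 20% overhead figures and the GPU prices come from the text. The `gpus_per_server` parameter is our assumption, reflecting that H200 systems are commonly sold in 8-GPU configurations. This is why roughly seven GPUs' worth of memory (972 / 141 ≈ 6.9) becomes eight GPUs.

```python
import math

BYTES_PER_PARAM = 2  # 16-bit (FP16/BF16) weights
OVERHEAD = 0.20      # extra 20% for runtime overhead, per the rule of thumb


def inference_memory_gb(params_billions: float) -> float:
    """Estimated GPU memory in GB: parameters x 2 bytes x 1.2."""
    return params_billions * BYTES_PER_PARAM * (1 + OVERHEAD)


def gpus_needed(memory_gb: float, gpu_memory_gb: float, gpus_per_server: int = 1) -> int:
    """Smallest GPU count, rounded up to whole servers, whose memory covers the estimate."""
    gpus = math.ceil(memory_gb / gpu_memory_gb)
    return math.ceil(gpus / gpus_per_server) * gpus_per_server


# Llama 3.1 405B on H200 (141 GB, USD 35,000 each), assumed sold in 8-GPU servers.
llama_mem = inference_memory_gb(405)               # about 972 GB
llama_gpus = gpus_needed(llama_mem, 141, gpus_per_server=8)
llama_cost = llama_gpus * 35_000

# TinyLlama 1.1B on a single RTX 4060 (8 GB, about USD 300).
tiny_mem = inference_memory_gb(1.1)                # about 2.64 GB
tiny_gpus = gpus_needed(tiny_mem, 8)
tiny_cost = tiny_gpus * 300
```

The flat 20% overhead does not grow with context length or the number of concurrent requests. For memory-heavy workloads, treat the result as a lower bound.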

This comparison shows how strongly the choice of LLM affects infrastructure and cost. When an organization decides to deploy GenAI infrastructure on-premises, it should identify LLMs that meet its needs while keeping costs under control.

Getting Started – From Pilot to Scale

Many groups begin their GenAI journey without clear goals. They may be in exploration mode, have ideas for business cases, or already use existing enterprise solutions. In each case, they still want to "see how it goes" before committing to a full investment in GenAI.

Even in "see how it goes" mode, groups should understand the roadmap to a scaled GenAI architecture, because it informs the initial investment and approach.

The easiest place to start is with a cloud partner. If the data is considered highly sensitive, on-premises hardware is the better starting point.

For cloud deployments, procurement and implementation of the use cases are relatively straightforward.

For hardware, plan for future growth in usage. Consider a possible path from a single proof-of-concept (POC) machine to a scaled-out three-tier architecture if needed (GPU servers, application servers, and data servers).

As an example of a vendor approach, let us look at Oracle's LLM solutions, its cloud options, and its on-premises hardware options.

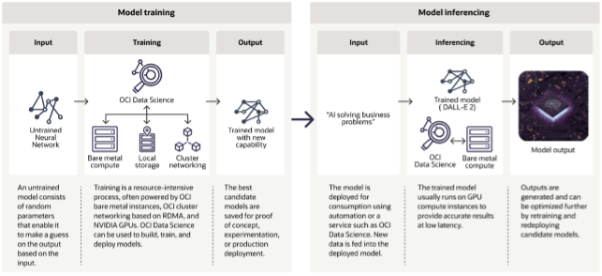

รายละเอียดต่อไปนี้แสดงกระบวนการแบบจำลอง LLM ที่มีอยู่ในคลาวด์ Oracle OCI

ภาคผนวก 3: กระบวนการจำลอง LLM พร้อมใช้งานในระบบคลาวด์ Oracle OCI

ภาคผนวก 4: ตัวเลือกคลาวด์โมเดล LLM

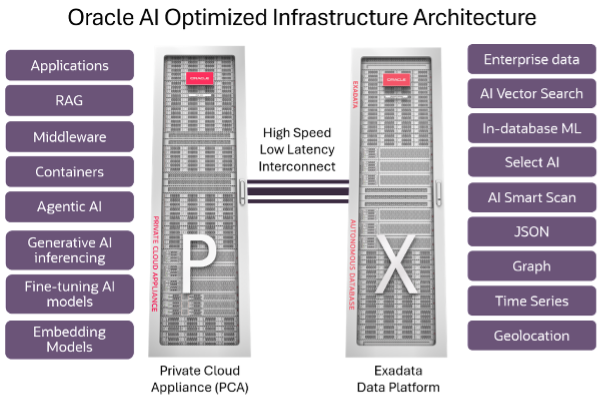

ในแง่ของตัวเลือกภายในองค์กร Oracle มีตัวเลือกคลาวด์ส่วนตัวสำหรับทั้งเซิร์ฟเวอร์แอปพลิเคชัน เซิร์ฟเวอร์ข้อมูล และเซิร์ฟเวอร์ GPU

ภาคผนวก 5: สถาปัตยกรรมโครงสร้างพื้นฐานที่ได้รับการเพิ่มประสิทธิภาพด้วย Oracle AI

คำอธิบายบางส่วน:

- Supportability: If an on-premises deployment model is chosen, consider the support model, such as LLMOps, covering resources, latency, upgrades, and so on.

- GenAI Ops: Whichever option is chosen, the application layer should incorporate GenAI Ops to manage applications. This provides a solid foundation and governance for future growth.

- Security/privacy: In heavily regulated sectors such as healthcare and legal, organizations should consider establishing an LLMOps team, with the option of starting with an on-premises deployment. This lets the team design the deployment so that operations can scale while data stays secure.

ผู้ประเมินกรณีการใช้งาน

มีกรณีการใช้งานหลักสามประเภทที่เราต้องพิจารณาเมื่อตัดสินใจว่าจะใช้ LLM ประเภทใด

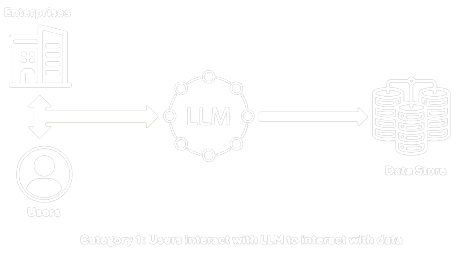

- หมวดที่ 1 – การใช้ LLM เพื่อโต้ตอบกับข้อมูล: จะใช้ในกรณีการใช้งานที่ใช้ LLM เพื่อโต้ตอบกับข้อมูลเท่านั้น LLM เหล่านี้สามารถผ่านการฝึกอบรมล่วงหน้าได้และไม่ต้องใช้พารามิเตอร์มากมาย พารามิเตอร์เหล่านี้สามารถนำไปใช้งานในเครื่องขนาดเล็กในสถานที่เฉพาะได้โดยใช้ Open Model เครื่องสามารถปรับขนาดให้รวม CPU/GPU หลายตัวหรือปรับใช้บน VM ส่วนตัวได้ ถือเป็นตัวเลือกที่ดีสำหรับการใช้งานในสถานที่ที่ความปลอดภัยของข้อมูล

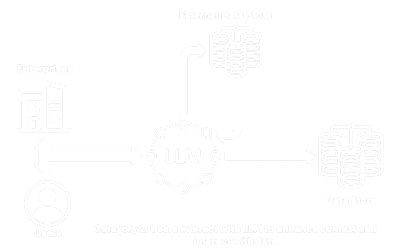

- หมวดที่ 2 – การใช้ LLM เพื่อปรับปรุงบริบทก่อนโต้ตอบกับข้อมูล: หมวดนี้จะใช้การเรียก API ไปยัง LLM ขนาดใหญ่กว่าเพื่อให้ได้บริบทที่ดีขึ้นแล้วจึงโต้ตอบกับข้อมูล ซึ่งสามารถใช้ได้เมื่อพารามิเตอร์มีขอบเขตกว้างขึ้นและไม่ใช่การโต้ตอบแบบง่ายๆ กับผู้ใช้ ปัญหาความเป็นส่วนตัวจำเป็นต้องได้รับการแก้ไขโดยให้แน่ใจว่าไม่มีการส่งข้อมูลที่ละเอียดอ่อนผ่านการเรียก API การเรียก API ควรใช้เพื่อปรับปรุงบริบทเท่านั้น ไม่ใช่โต้ตอบกับข้อมูลที่ละเอียดอ่อน

- หมวดที่ 3 – ตัวแทน AI ที่ใช้ LLM เพื่อโต้ตอบกับข้อมูล: หมวดนี้คล้ายกับหมวดที่ 2 อย่างไรก็ตาม ผู้ใช้/แอปพลิเคชันขององค์กรจะโต้ตอบกับตัวแทน AI ตัวแทน AI จะกำหนดแนวทางการดำเนินการซึ่งอาจเป็น LLM เพื่อปรับปรุงบริบทก่อนที่จะโต้ตอบกับข้อมูล

ในทั้งสามหมวดหมู่นี้ ข้อมูลที่ละเอียดอ่อนจะอยู่ในเครือข่ายที่ปลอดภัย และข้อมูลนี้จะไม่ถูกใช้เพื่อการฝึกอบรมโมเดล
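Categories 2 and 3 require that no sensitive data be sent through API calls. One way to enforce this is to redact known sensitive patterns before a prompt leaves the network, then restore them locally afterwards. This minimal sketch assumes two hypothetical rules: email addresses and 13-digit Thai national ID numbers. Regular expressions miss names and free-text identifiers, so production use would typically need a dedicated PII-detection tool.

```python
import re
from typing import Dict, Tuple

# Hypothetical redaction rules: email addresses and 13-digit Thai national ID numbers.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("THAI_ID", re.compile(r"\b\d-?\d{4}-?\d{5}-?\d{2}-?\d\b")),
]


def redact(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace sensitive values with placeholders before text leaves the network.

    Returns the redacted text and a placeholder-to-value mapping that stays inside
    the secure network so responses can be re-identified locally.
    """
    mapping: Dict[str, str] = {}
    for label, pattern in REDACTION_RULES:
        def substitute(match: re.Match, label: str = label) -> str:
            token = f"[{label}_{len(mapping) + 1}]"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Re-insert the original values into a response, inside the secure network."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text
```

For example, `redact("Contact somchai@example.com about ID 1-2345-67890-12-3.")` yields `"Contact [EMAIL_1] about ID [THAI_ID_2]."`. The mapping never leaves the secure network; only the placeholder text is sent to the external LLM.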

The first category is simpler and cheaper to start with but suits only specific use cases. Training is also done for those specific use cases, so these LLMs are built with a limited number of parameters. Even so, they can perform very well on the use cases they were trained for.

The second category combines small, domain-specific LLMs deployed inside the network with larger LLMs called through APIs to enrich context. Costs grow with the number of users, API calls, and so on. In return, this approach offers considerable flexibility to extend simpler use cases as the user base and complexity grow.
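The categories above can be turned into a first-pass triage. This minimal sketch assumes three yes/no questions of our own choosing; the `UseCase` fields are hypothetical. The category logic follows the descriptions above. A real evaluation would also weigh cost, scale, and accuracy requirements.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class UseCase:
    name: str
    agent_decides_actions: bool   # an AI agent chooses the next step
    needs_broader_context: bool   # a larger, API-hosted LLM would enrich context
    handles_sensitive_data: bool


def categorize(uc: UseCase) -> Tuple[int, str]:
    """Map a use case to Category 1, 2, or 3 and a deployment hint."""
    if uc.agent_decides_actions:
        category = 3
    elif uc.needs_broader_context:
        category = 2
    else:
        return 1, "small open model on-premises or on a private VM"
    hint = "local model for data access; external API for context enrichment only"
    if uc.handles_sensitive_data:
        hint += "; redact sensitive fields before any API call"
    return category, hint
```

A policy Q&A bot over internal documents lands in Category 1. A research assistant that needs outside context lands in Category 2, and gets a redaction reminder if it touches sensitive data.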

Summary

Choosing the best GenAI deployment architecture is a strategic investment that balances immediate operational needs against long-term digital transformation goals. Organizations should evaluate the distinct advantages and limitations of managed API services, cloud-hosted virtual private servers, and on-premises hardware. Doing so lets them align their infrastructure choices with their specific needs for cost efficiency, data governance, organizational capability, and model flexibility. The GenAI landscape continues to evolve. Decision-makers with a clear understanding of these architectural trade-offs will be better placed to make informed investments in infrastructure, people, and partnerships.

This strategic alignment ensures that AI adoption does more than solve today's business problems. It also builds a scalable foundation that can adapt to new technologies and changing market demands. The hardware and cloud options detailed in this document are a starting point for that journey. They offer a practical path to successful enterprise AI integration, whatever an organization's size or technical maturity.

About the Authors

For information or permission to reprint, please contact Ready at [email protected] or ArcFusion at [email protected]

For the latest Ready and ArcFusion updates and content, visit us at readyms.com and archfusion.ai

Follow Ready and ArcFusion on LinkedIn

© Ready Management Solutions 2025. All rights reserved.

© ArcFusion 2025. All rights reserved.

About Ready

Ready is a consulting firm committed to delivering innovative solutions that meet operational and technology needs. With a focus on strategy, automation, and enablement, Ready specializes in forward-looking solutions for modern clients. Ready operates in the United States, the Philippines, Australia, and Thailand, and plans further expansion. It is poised to become a major global force in consulting.
